For over a decade, Google has honored noindex directives placed in the robots.txt file, even though the rule was never officially documented. Google is now changing how it handles unsupported rules in robots.txt, and that includes the noindex directive.

According to Google, from 1 September 2019 it will retire all code that handles unsupported and unpublished rules in robots.txt. The noindex directive is one of these unsupported rules.

The announcement was made on Google’s Webmaster blog. Learn more about this below.

What is a noindex directive?

A noindex directive tells search engines not to index a page, so the page won't appear in search results. It is often used as part of optimising crawl efficiency, because it keeps pages you don't want shown out of the search results.

How does this affect your SEO?

According to Google, retiring the code that handles unsupported and unpublished rules such as the noindex directive is a move to maintain a healthy ecosystem. The move also prepares for potential future open source releases.

If you rely heavily on the noindex directive in robots.txt and will be affected by this announcement, Google says there is no need to worry. There are alternatives you can use.

What are the alternatives?

If you rely on the noindex directive in robots.txt, Google suggests the following options in its official blog post. Check them out and see which best applies to you.

(1) Noindex in robots meta tags

This is one of the most effective ways to remove URLs from the index while still allowing them to be crawled. The noindex directive can be set in HTTP response headers as well as in the HTML of the page.
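As a rough illustration of the two placements, the Python sketch below adds a robots meta tag to an HTML document and an `X-Robots-Tag` entry to a set of response headers. The helper names `add_noindex_meta` and `add_noindex_header` are hypothetical, and the code assumes the page has a plain `<head>` tag; a real site would normally set these through its CMS or web server configuration.

```python
# Two places a noindex directive can live: the page HTML or the HTTP response.
NOINDEX_META_TAG = '<meta name="robots" content="noindex">'
NOINDEX_HEADER = ("X-Robots-Tag", "noindex")


def add_noindex_meta(html: str) -> str:
    """Insert the robots meta tag right after the opening <head> tag."""
    marker = "<head>"
    position = html.find(marker)
    if position == -1:
        raise ValueError("document has no <head> tag")
    insert_at = position + len(marker)
    return html[:insert_at] + NOINDEX_META_TAG + html[insert_at:]


def add_noindex_header(headers: dict) -> dict:
    """Return a copy of the response headers with X-Robots-Tag set to noindex."""
    updated = dict(headers)
    name, value = NOINDEX_HEADER
    updated[name] = value
    return updated


page = "<html><head><title>Draft</title></head><body></body></html>"
tagged_page = add_noindex_meta(page)
tagged_headers = add_noindex_header({"Content-Type": "application/pdf"})
```

The header form is useful for files such as PDFs, which have no HTML in which to place a meta tag.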

(2) 404 and 410 HTTP status codes

Both status codes tell search engines that the page does not exist. Once Google crawls and processes URLs that return these status codes, it drops them from its index.
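A minimal sketch of how a site might choose between the two codes, assuming it keeps one set of live paths and one set of deliberately retired paths. The function name `status_for` and both sets are hypothetical, used here only for illustration.

```python
from http import HTTPStatus


def status_for(path: str, live_paths: set, retired_paths: set) -> HTTPStatus:
    """Pick a response status for a requested path."""
    if path in live_paths:
        return HTTPStatus.OK          # 200: the page exists
    if path in retired_paths:
        return HTTPStatus.GONE        # 410: the page was removed on purpose
    return HTTPStatus.NOT_FOUND       # 404: the page is not known to the site


live = {"/", "/services"}
retired = {"/old-promo"}
promo_status = status_for("/old-promo", live, retired)
```

Either code leads to the URL being dropped; 410 simply states more explicitly that the removal is intentional.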

(3) Password protection

If you want to remove a page from Google's index, hiding it behind a login will generally do so. The exception is when markup is used to indicate subscription or paywalled content, in which case the page can stay in the index.

(4) Disallow in robots.txt

If you don't want search engines to index a certain page, you can block the page from being crawled. A page that can't be crawled usually can't have its content indexed. However, a search engine can still index a blocked URL if it finds links to that URL on other pages, although Google says it aims to make such pages less visible in its search results in the future.
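To check what a Disallow rule actually blocks, a quick test with Python's standard `urllib.robotparser` module can help. This is only a sketch: the robots.txt content and the example.com URLs are made up, and Python's parser is not Google's, so edge cases may be interpreted differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks one directory for all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


blocked = is_crawlable(ROBOTS_TXT, "https://example.com/private/report.html")
allowed = is_crawlable(ROBOTS_TXT, "https://example.com/blog/post.html")
```

Here `blocked` is False and `allowed` is True, because only URLs under /private/ match the Disallow rule.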

(5) Search Console Remove URL tool

One of the easiest alternatives to the noindex directive is the Remove URL tool in Google's Search Console. It is a quick way to remove a URL from Google's search results, but the removal is only temporary. If you need a fast, short-term way to hide a page from Google's search results, this tool is for you.

Alongside the announcement about the noindex directive, Google is working on making the robots exclusion protocol an official standard. Retiring unsupported rules is the first change that everyone can expect from this work. In line with this, Google has already released its robots.txt parser as an open source project.

Google has been working for years toward making the protocol a standard. As the standard is finalized, Google can now move forward.

According to Google, these kinds of unsupported and unpublished implementations hurt websites' presence in Google's search results.

How does this affect you, and why should you care? The key takeaway from this announcement is to stop using the noindex directive in your robots.txt file and to use one of the alternatives Google recommends instead.
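One way to find out whether a robots.txt file still contains noindex rules is to scan it line by line. The Python sketch below assumes the rules are written in the usual `Noindex: /path` form; the function name `find_noindex_lines` and the sample file are hypothetical.

```python
def find_noindex_lines(robots_txt: str) -> list:
    """Return (line_number, line) pairs for every noindex rule in robots.txt."""
    hits = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()   # ignore comments and whitespace
        if ":" not in line:
            continue
        field = line.split(":", 1)[0].strip().lower()
        if field == "noindex":
            hits.append((number, line))
    return hits


sample = """\
User-agent: *
Noindex: /drafts/
Disallow: /tmp/   # temporary files
"""
found = find_noindex_lines(sample)
```

Each reported line is a candidate for replacement with one of the alternatives listed above.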

This is just one of many changes to the robots.txt protocol. Google will publish more information about this change on its Google Developer's Blog.

We hope you find this useful. Make sure to stay up to date with the Google updates that are still to come.

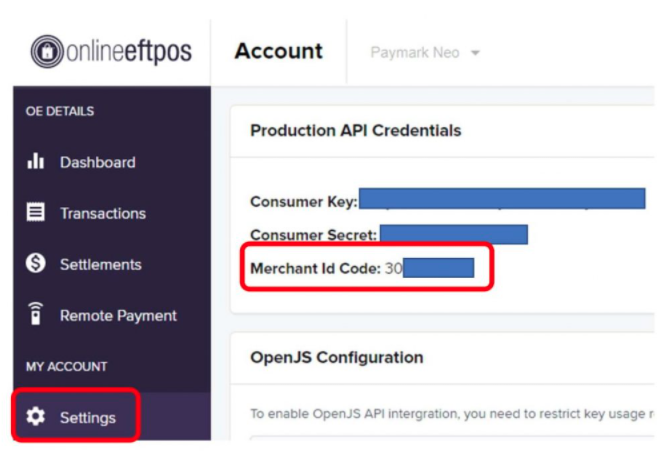

How YHP Can Help You

As an expert in the industry, YHP can help you analyze your website to see whether your robots.txt file contains noindex directives. If it does, we can apply the alternatives that Google recommends, so that the pages you don't want indexed or crawled are handled in line with Google's standard. We can also help with your SEO strategy to make sure that the right pages on your website show up on the first page of Google's search results. If you need help with your SEO strategy, let YHP help you. Call us on 0800 WEB DESIGN.